Hardware-Enforced AI Security: Fortifying Your Models from the Ground Up

I’ve spent a lot of time thinking about the layers of defence we keep piling onto AI systems: secure coding, locked-down pipelines, adversarial testing, red-teaming, the whole lot. All of it matters. But there’s a blunt truth underneath all of it: if the underlying hardware and execution environment can’t be trusted, you’re ultimately building a very clever house on sand.

That’s why hardware-enforced AI security is becoming non-negotiable for certain classes of model. Not every chatbot needs an enclave and a chain of attestation, obviously. But if you’ve poured months into training a model that represents real competitive advantage—or worse, you’re using it to make decisions in regulated or safety-adjacent environments—then protecting the model’s weights, the inference path, and the keys that govern access isn’t a “nice extra”. It’s table stakes.

Picture the scene. You’ve trained a model, tuned it, deployed it, and it’s driving an important workflow. Then an adversary manages to exfiltrate the weights—your model’s actual “brain”—or quietly tampers with the inference process so it behaves differently under specific conditions. That’s not just an incident report and a bad week. That can be intellectual property theft, manipulated decisions, data integrity problems, or a full-on loss of trust in the system’s output. Once you can’t prove the model is behaving as intended, you’ve got an expensive liability sitting in production.

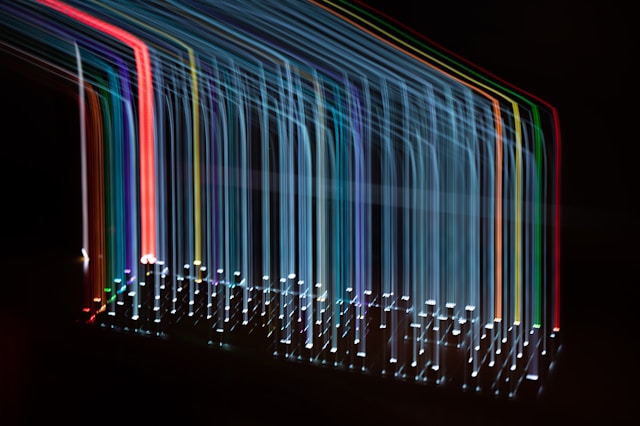

This is where hardware security earns its keep. Technologies like Trusted Execution Environments (TEEs), secure enclaves, and Hardware Security Modules (HSMs) don’t replace software security. They make it harder for software compromise to matter. They give you a “vault” at the lowest practical layer, so even if the host is untrusted, the most sensitive parts of the system aren’t casually reachable.

Enclave-based inference: keeping the “brain” in a vault

One of the most compelling uses of TEEs in AI is enclave-based inference. A TEE creates an isolated execution space protected by hardware, which means the code and data inside that enclave are shielded even if the operating system, the hypervisor, or other surrounding components are compromised.

For AI inference, that has three big implications.

First, model confidentiality. If the model weights live and execute inside the enclave, stealing them becomes significantly harder. Even with privileged access to the host, the attacker shouldn’t be able to simply dump memory and walk off with your intellectual property.

Second, integrity. You’re not just protecting secrets, you’re protecting behaviour. If inference happens inside an enclave and you’ve got the right attestation and control around what runs there, it becomes far more difficult for an attacker to inject code into the inference path or tamper with what the model is doing mid-flight.

Third, privacy for sensitive inference workloads. In some environments—healthcare is the obvious one—you might want the input data to be processed without being exposed to the host at all. Enclaves can help you keep raw inputs protected while still producing useful outputs. It doesn’t magically make privacy “done”, but it gives you another layer that’s very hard to fake.
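
To make the integrity point a little more concrete, here is a minimal Python sketch of attestation-gated key release: the model key is handed over only when the enclave reports a measurement (a hash of the code it loaded) that appears on an allowlist. Everything named here is hypothetical, including `APPROVED_MEASUREMENTS`, `release_model_key`, and the build label. Real platforms deliver the measurement inside a signed attestation report, and verifying that signature and its freshness is assumed here rather than shown.

```python
import hashlib
import hmac

# Hypothetical allowlist: SHA-256 measurements of enclave builds approved
# to load the model. The build label below is made up for illustration.
APPROVED_MEASUREMENTS = {
    hashlib.sha256(b"inference-enclave-v1.4.2").hexdigest(),
}


def measurement_is_approved(reported: str) -> bool:
    """Return True if the reported measurement matches an approved build."""
    reported_bytes = reported.encode("utf-8")
    return any(
        hmac.compare_digest(reported_bytes, approved.encode("utf-8"))
        for approved in APPROVED_MEASUREMENTS
    )


def release_model_key(reported_measurement: str, model_key: bytes) -> bytes:
    """Hand the model key only to an enclave with an approved measurement.

    Assumption: the caller has already verified the attestation report's
    signature, so `reported_measurement` can be trusted as genuine.
    """
    if not measurement_is_approved(reported_measurement):
        raise PermissionError("enclave measurement is not on the allowlist")
    return model_key
```

The design choice worth noticing is that the key service decides based on what code is running, not on who is asking. A compromised host can request the key as often as it likes, but without an approved measurement it gets nothing.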

You’ll hear TEEs discussed in the context of technologies like Intel SGX (application-level enclaves) and AMD SEV (encrypted virtual machines). The key point isn’t which vendor badge is on it; the key point is that cloud and on-prem platforms are increasingly making “confidential compute” a practical option, which means architects can now actually design for it rather than treating it as a research topic.

Hardware-rooted key management: where the real power sits

If TEEs are the vault for execution, HSMs are the vault for keys. And keys are where the real power sits. If an attacker gets your keys, they don’t need to break your crypto—they just use it.

In AI systems, hardware-rooted key management becomes important surprisingly quickly. If you’re encrypting model artefacts at rest (and you should be), then the encryption keys need to be protected like crown jewels. If you’re signing model releases so you can prove what was deployed and who approved it, the signing keys need to be protected even more. Otherwise you’ve built a beautiful supply chain story that collapses the moment someone steals a private key and starts shipping “official” updates.

HSMs exist for that exact problem. They’re designed so keys are generated, stored, and used inside a tamper-resistant boundary, and cryptographic operations happen within that boundary. The private material is far less likely to end up in logs, memory dumps, developer laptops, or “temporary” CI variables that mysteriously become permanent.
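
The pattern is easier to see in code than in prose, so here is a toy Python sketch of it. The class `SoftwareKeyBoundary` is a hypothetical stand-in for an HSM client, not a real one: it generates a key internally and exposes only `sign` and `verify`, never the key itself. It also uses HMAC-SHA256 as a placeholder for the asymmetric signatures a real release-signing setup would normally use, purely so the example runs with the standard library alone.

```python
import hashlib
import hmac
import secrets


class SoftwareKeyBoundary:
    """Toy stand-in for an HSM: the key is created inside and never returned.

    Python cannot truly enforce this boundary; a real HSM does so in
    tamper-resistant hardware. HMAC is a placeholder for a real signature.
    """

    def __init__(self) -> None:
        self._key = secrets.token_bytes(32)  # generated "inside" the boundary

    def sign(self, digest: bytes) -> bytes:
        """Return an authentication tag over the digest using the internal key."""
        return hmac.new(self._key, digest, hashlib.sha256).digest()

    def verify(self, digest: bytes, tag: bytes) -> bool:
        """Check a tag without ever exposing the key to the caller."""
        return hmac.compare_digest(self.sign(digest), tag)


def artifact_digest(model_bytes: bytes) -> bytes:
    """Hash the model artefact so only a short digest crosses the boundary."""
    return hashlib.sha256(model_bytes).digest()


if __name__ == "__main__":
    hsm = SoftwareKeyBoundary()
    release = b"model weights, release 1"
    tag = hsm.sign(artifact_digest(release))
    print(hsm.verify(artifact_digest(release), tag))
    print(hsm.verify(artifact_digest(b"tampered weights"), tag))
```

Callers only ever hold digests and tags. There is no method that returns the key, which is the property that keeps it out of logs, CI variables, and laptops.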

In cloud land, most organisations won’t rack their own HSMs anymore, but the pattern stays the same: use a hardware-backed keystore for anything that would cause catastrophic impact if it leaked, and build your model deployment process so it relies on that chain of trust rather than good intentions.

Side-channels: the awkward footnote you can’t ignore

Hardware security is not a magic cloak. Even with TEEs and HSMs, you still need to be honest about the threat model, because sophisticated adversaries don’t always come through the front door.

Side-channel attacks are the classic example. Instead of exploiting a bug, the attacker tries to infer secrets from physical or microarchitectural “leakage” like timing differences, cache behaviour, or other observable signals. This is the kind of thing that feels academic until it isn’t—especially when you’re dealing with high-value model weights or high-value keys.

Mitigations tend to be a mix of disciplined implementation and practical awareness. Constant-time approaches help reduce timing leakage in sensitive operations. Some designs introduce noise or randomisation to make signals harder to interpret. And, at the higher level, you want to be very deliberate about what you assume the cloud provider is protecting you from versus what you still need to design around in your own code and deployment patterns.
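
As a small illustration of the constant-time idea, the Python sketch below contrasts an early-exit comparison with the standard library’s `hmac.compare_digest`. The function names `leaky_equal` and `constant_time_equal` are made up for this example. Both return the same answers; the difference is that the first stops at the first mismatching byte, so its running time can hint at how much of a secret an attacker has guessed correctly.

```python
import hmac


def leaky_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: time taken depends on where the first mismatch is."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # returns sooner the earlier the mismatch occurs
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Comparison designed not to reveal where the inputs differ."""
    return hmac.compare_digest(a, b)


if __name__ == "__main__":
    secret_tag = bytes.fromhex("a1b2c3d4")
    guess = bytes.fromhex("a1b2ffff")
    print(leaky_equal(secret_tag, guess), constant_time_equal(secret_tag, guess))
```

This only addresses one narrow timing leak in application code. It does nothing about cache or other microarchitectural channels, which is why the threat-model conversation still matters.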

The broader point is simple: even “secure enclaves” live on real CPUs with real microarchitecture, and microarchitecture can leak. If the model is valuable enough, the attacker will get creative enough.

Hardware-enforced security isn’t “extra”, it’s a design choice

The journey to resilient AI systems is multi-layered. Strong software security is still the foundation—secure SDLC, proper CI/CD controls, sensible access management, continuous monitoring, and adversarial testing all remain essential.

But as AI models become more valuable and more central to business operations, hardware-enforced security becomes the layer that makes the rest of your story believable. It’s what helps you say, with a straight face, “Even if parts of the platform are compromised, the model and its keys remain protected.”

At the end of the day, this is about building AI systems that can be trusted not because we hope the host is clean, but because we’ve designed the system so the host doesn’t get to see or control the most sensitive bits in the first place.

That’s what it means to fortify from the ground up.