Zero Trust for AI: Securing Intelligence in a Distributed World

Traditional perimeter security has been dying for years. AI just accelerates the funeral.

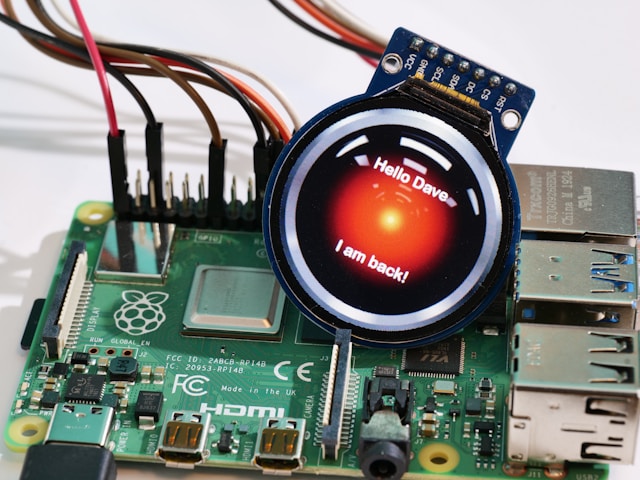

The reason is simple: modern AI isn’t a single application sitting behind a firewall. It’s a distributed system of systems—data pipelines, feature stores, training jobs, model registries, inference endpoints, retrieval services, agent tools, serverless glue, observability layers—often spread across cloud accounts, regions, vendors, and increasingly, edge devices. In that world the idea of a “trusted internal network” stops being a control and starts being a comforting story.

If an attacker gets a foothold anywhere inside that story—an over-permissioned service account, a compromised CI runner, a leaked token in a notebook, a misconfigured endpoint—the blast radius can be spectacular. Not because AI is magic, but because AI systems are connected, privileged, and hungry for data. Lateral movement becomes an architectural feature.

This is why Zero Trust isn’t a branding exercise for AI. It’s the minimum viable security stance for distributed intelligence.
